As I’ve mentioned earlier, I’ve been working quite a lot with AngularJS lately, most recently on a search function for a website. Naturally, since this is an ajax application, the search result page never reloads when I perform a search. Nevertheless, I would like to:

- Be able to go back and forth between my searches with the standard browser functions

- See my new query in the location bar

- Reload the page and have the latest query (not the initial one) execute again

- Make this invisible to the user, meaning no hashbangs, only a nice ?query=likethis.

Fortunately, HTML5 is there for me with the History API! It is supported in recent versions of Chrome, Firefox and Safari, as well as in Internet Explorer 10; unfortunately, IE9 and earlier don’t support it. In AngularJS, however, we don’t want to access the history object directly, but rather go through the $location abstraction.

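The first thing we need to do is set AngularJS to use HTML5 mode for $location. This changes the way $location works, from the default hashbang mode (where state lives in the # fragment) to HTML5 mode, where $location uses the History API to update real paths and query strings.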

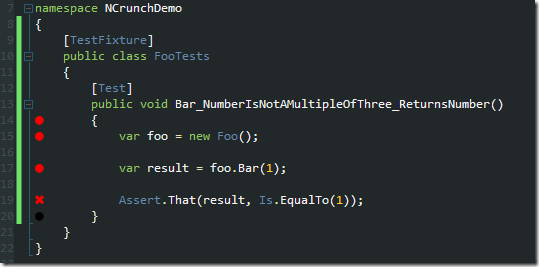

```javascript
angular.module('Foobar', [])
  // …
```

Note: Setting html5Mode to true seems to cause problems in browsers that don’t support the History API, even though AngularJS is supposed to fall back to the default hashbang mode there. So it might be a good idea to check for support before turning it on, for example with Modernizr.history.
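A minimal sketch of what that configuration could look like, assuming the module is called 'Foobar' as above. It turns on HTML5 mode only when the History API is available, using Modernizr.history if Modernizr happens to be loaded and otherwise checking for history.pushState directly; the helper names supportsHistoryApi and configureLocation are hypothetical. Also note that in AngularJS 1.3 and later, html5Mode(true) expects a `<base href>` tag in the page (or you pass `{ enabled: true, requireBase: false }` instead).

```javascript
// Hypothetical helper: is the HTML5 History API available?
// Uses Modernizr.history when Modernizr is loaded (assumption), otherwise
// feature-detects history.pushState on the given window object.
function supportsHistoryApi(win) {
  if (typeof Modernizr !== 'undefined') {
    return !!Modernizr.history;
  }
  return !!(win && win.history && typeof win.history.pushState === 'function');
}

// Hypothetical helper: enable html5Mode only when it is supported.
// Returns whether HTML5 mode was switched on.
function configureLocation($locationProvider, win) {
  var enabled = supportsHistoryApi(win);
  if (enabled) {
    $locationProvider.html5Mode(true);
  }
  return enabled;
}

// Register with AngularJS only when the framework is present on the page.
if (typeof angular !== 'undefined') {
  angular.module('Foobar', [])
    .config(['$locationProvider', function ($locationProvider) {
      configureLocation($locationProvider, window);
    }]);
}
```

Keeping the check in a plain function means the config block stays a one-liner and the decision can be tested with a fake provider.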

Now, all I have to do whenever I perform a search is update the location to reflect the new query.

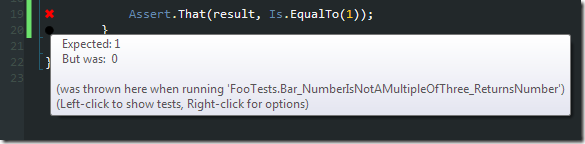

```javascript
$scope.search = function() {
  // …
```
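One way the search function might look, as a sketch: it assumes the search box is bound to $scope.query and that runQuery is a hypothetical function doing the actual ajax request. It is written as a plain function receiving its dependencies, so it can be wired into a controller or tested with mocks. $location.search('query', value) writes the ?query= parameter, and $location.search() with no arguments returns the current parameters, which is what lets a reload pick the latest query back up.

```javascript
// Sketch of a search controller. $scope.query mirrors the search box;
// runQuery is a hypothetical function that performs the ajax search.
function SearchController($scope, $location, runQuery) {
  // On (re)load, pick up a query that is already present in the URL.
  var initial = $location.search().query;
  if (initial) {
    $scope.query = initial;
    runQuery(initial);
  }

  $scope.search = function () {
    var query = $scope.query;
    // Write ?query=... into the address bar. With html5Mode enabled,
    // AngularJS records this as a new browser history entry.
    $location.search('query', query);
    runQuery(query);
  };
}
```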

This makes the querystring change when I perform a search, and it also takes care of reloading the page. It does not, however, make the back and forward buttons work. Sure, the location changes when you click back, but the search isn’t actually run again. To make that work, the search has to be re-run whenever the location changes.

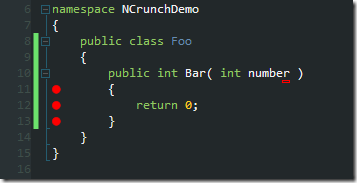

In plain JavaScript, you would add a listener to the popstate event. But, you know, AngularJS and all that: we want to use the $location abstraction. So instead, we create a [$watch](http://docs.angularjs.org/api/ng.$rootScope.Scope#$watch) that checks for changes in $location.url().

```javascript
$scope.$watch(function () { return $location.url(); }, function (url) {
  // …
```
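A sketch of how the watch might be completed, assuming $scope.query holds the current search and runQuery is a hypothetical function that performs it; watchLocation is likewise a hypothetical name. The listener reads the query back out of $location.search() and only re-runs it when it differs from what is already on screen, so a normal search (which sets the URL to the current query) does not fire a duplicate request, while back and forward do. AngularJS also calls a $watch listener once when it is first registered, which covers a page load with ?query= already in the URL.

```javascript
// Sketch: re-run the search whenever the URL changes (back/forward).
// $scope.query is assumed to hold the query currently shown;
// runQuery is a hypothetical function performing the ajax search.
function watchLocation($scope, $location, runQuery) {
  $scope.$watch(function () { return $location.url(); }, function (url) {
    var query = $location.search().query;
    // Skip when the URL already matches the displayed query, e.g. right
    // after $scope.search() pushed it, to avoid searching twice.
    if (query && query !== $scope.query) {
      $scope.query = query;
      runQuery(query);
    }
  });
}
```

Listening for the $locationChangeSuccess event with $scope.$on would be an alternative to the $watch; the watch keeps the check next to the value it depends on.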

And that’s pretty much it! Now you can step back and forth in history with the browser buttons, and have AngularJS perform the search correctly every time!
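For comparison, the plain JavaScript route (pushState plus a popstate listener) might look roughly like this. It is only a sketch: installHistorySearch and runQuery are hypothetical names, the window object is passed in so the code can be exercised outside a browser, and URLSearchParams assumes a modern browser (IE10 doesn't have it).

```javascript
// Plain History API version, no AngularJS involved.
// runQuery is a hypothetical function performing the ajax search.
function installHistorySearch(win, runQuery) {
  function queryFromLocation() {
    return new URLSearchParams(win.location.search).get('query');
  }

  // popstate fires when the user moves back/forward between entries
  // created with pushState; re-run the search for the restored URL.
  win.addEventListener('popstate', function () {
    var query = queryFromLocation();
    if (query !== null) {
      runQuery(query);
    }
  });

  // Returns the function to call when the user submits a new search.
  return function search(query) {
    win.history.pushState({ query: query }, '', '?query=' + encodeURIComponent(query));
    runQuery(query);
  };
}
```

In a page this would be used as `var search = installHistorySearch(window, runQuery);` followed by `search('likethis');` on submit. This is exactly the bookkeeping that $location hides in the AngularJS version.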